Air Piano using OpenCV and Python

Play the piano by just moving your fingers in the air!

My inspiration to make an “Air Piano”

Recently I visited my cousin, who had been trying to learn the piano for quite some time. Because of the pandemic, her teacher could not come to her home, so they were practising over Zoom meetings. That was when the idea of a virtual piano that both she and her teacher could use to learn music dawned on me. As I thought about it, I wondered: why not move beyond the keyboard altogether? Why not make music out of thin air, and let our creative minds design an interaction where a person can play the piano just by moving their hands in the air? That was when I decided to make an “Air Piano”.

Technical Description of the project:

Air Piano is a project at the confluence of computer vision and human-computer interaction. To build it, I used Python and OpenCV, an open-source computer vision and machine learning software library.

Another important library for this project is PyAutoGUI. PyAutoGUI lets your Python scripts control the mouse and keyboard to automate interactions with other applications: it can move the mouse, click or type in the windows of other applications, take screenshots, and more.

Now let’s understand the flow of the project:

- The first step is to capture the user's video stream as input.

- After reading the input frame by frame, we convert each frame from the BGR colour space (OpenCV's default channel order) to the HSV colour space so that we can work with colours more easily.

Why convert to HSV? Unlike RGB, HSV separates the image intensity (Value) from the colour information (Hue and Saturation). In computer vision, you often want to separate colour from intensity, for example to be robust to lighting changes or to remove shadows.

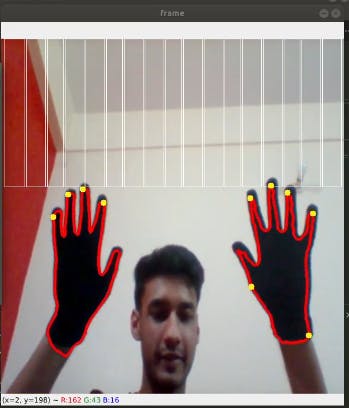

- The hand is detected using a black colour mask created in the HSV colour space. For this purpose, I chose to wear a pair of black gloves, because detecting skin colour was comparatively harder and would have made the project harder to generalise.

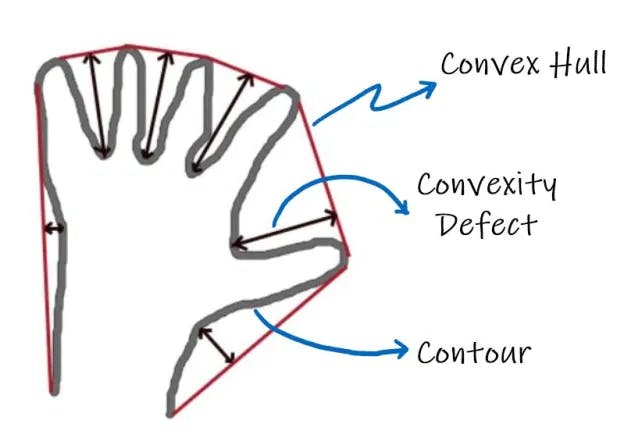

- Once the hand is detected, we find its contours, i.e., the boundary of the hand. We then draw the convex hull to find the surrounding convex polygon. From this polygon, we extract the fingertips using OpenCV's convexity defects function.

What is a convex hull? The convex hull is the smallest convex polygon that completely encloses an object.

What are convexity defects? Any deviation of the contour from its convex hull is known as a convexity defect. For an open hand, the deepest defects are the gaps between the fingers.

- A filter is also applied to keep only the fingertips, based on the distance between two points: the fingertip and the finger joint. Alternatively, you can use the angle between the fingers to achieve the same result.

- Before using PyAutoGUI, we draw the piano keys on the frame; these are our “Air Piano Keys”.

- The last part uses the PyAutoGUI library to press keyboard keys depending on the coordinates of your hand movements (the fingertips, to be precise). When the program runs, it tracks the positions of the fingertips in the frame and automatically presses the corresponding keys on the keyboard. To make this work, open another window with the virtual piano at https://www.onlinepianist.com/virtual-piano.

This is the virtual piano we will control using our fingertips.

THE FINAL RESULT LOOKED LIKE THIS:

That was the detailed technical description of the “Air Piano” project, and I hope you learned a lot from it. Visit the GitHub repo and check out the full code for a better understanding. The following are the sources I used to learn, which helped me build this project successfully:

Future direction and use cases:

Developing along similar lines, a whole suite of instruments could be made gesture-controlled using the same principle, creating a very interactive environment for learning music. We can also add more utilities and use state-of-the-art developments (such as the MediaPipe library) to make this project more interesting.